I recently had some Zoom Room Macs that needed some automation love, and I thought I’d share how you can enable Automatic Login via script. Perhaps you have several dozen and use Jamf or some other Mac management tool? Interested? Read on!

Currently, what’s out there is either a standalone encoder or part of a larger packaging workflow that creates a new user. The standalone encoders lack niceties like logging or account password verification, and the package method adds packaging as a required dependency whenever anything needs to change. Above all, every script requires Python, Perl, or Ruby, which are all on Apple’s hit list for removal in an upcoming OS release. For now macOS Monterey still has all of these runtimes, but there will come a day when macOS won’t. Will you really want to add third-party scripting runtimes and weaken your security by increasing attack surface when you can weaken your security using just shell? 😜

So for some fun, I re-implemented the /etc/kcpassword encoder in shell so it requires only awk, sed, and xxd, all of which ship with macOS out of the box. I also added some bells and whistles.

Some of the features are:

- If the username is empty, it will fully disable Automatic Login, since turning it off via the System Preferences GUI does not remove the /etc/kcpassword file if it has been enabled (!)

- Ensures the specified user exists

- Verifies the password is valid for the specified user

- Can handle blank passwords

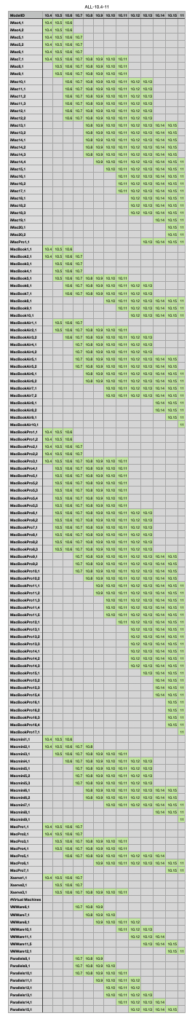

- Works on OS X 10.5+ including macOS 12 Monterey

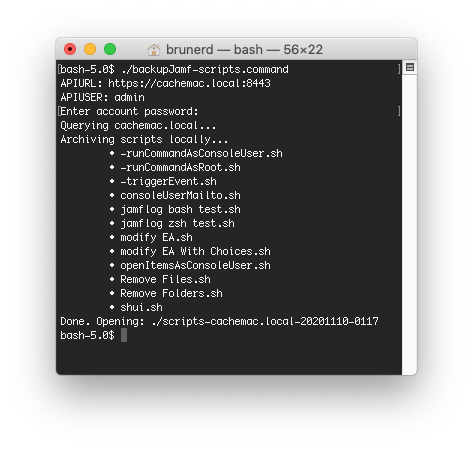
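To give a feel for the checks in the feature list, here is a minimal sketch. The function names are hypothetical and are not taken from the actual script; `dscl . -authonly` and the `autoLoginUser` key in `com.apple.loginwindow` are the standard macOS pieces I'm assuming here, and the real script may well do it differently.

```shell
#!/bin/bash
# Sketch only: hypothetical helper names, not the script's own functions.

# True if the account exists on this machine.
user_exists() {
    id -u "$1" >/dev/null 2>&1
}

# True if the password authenticates for the user.
# macOS only: relies on dscl's -authonly verb.
password_valid() {
    /usr/bin/dscl . -authonly "$1" "$2" >/dev/null 2>&1
}

# Fully disable Automatic Login (needs root): clear the login window
# preference and remove the kcpassword file the GUI leaves behind.
disable_autologin() {
    /usr/bin/defaults delete /Library/Preferences/com.apple.loginwindow autoLoginUser 2>/dev/null
    /bin/rm -f /etc/kcpassword
}
```

A caller would typically bail out early, for example `user_exists "$username" || exit 1`, before touching anything on disk.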

For the Jamf admin the script is setAutomaticLogin.jamf.sh, and for standalone usage get setAutomaticLogin.sh. Both take a username and password, in that order, and then enable Automatic Login if it all checks out. The difference with the Jamf script is that the first parameter is ${3} versus ${1} for the standalone version.
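The parameter offset can be pictured with a small sketch. `read_credentials` is a hypothetical helper, not something from either script, and I'm assuming the password simply follows the username (so ${4} under Jamf, where ${1} and ${2} are the mount point and computer name that Jamf always passes).

```shell
#!/bin/bash
# Sketch only: read_credentials is a made-up name for illustration.
# offset 1 = standalone (${1}, ${2}); offset 3 = Jamf (${3} and, by assumption, ${4}).
read_credentials() {
    local offset="$1"; shift
    local args=("$@")
    username="${args[offset - 1]}"   # arrays are 0-based, positional parameters 1-based
    password="${args[offset]}"
}

# Standalone style: username and password come first.
read_credentials 1 "zoomroom" "s3cret"

# Jamf style: two reserved parameters precede the username.
read_credentials 3 "/" "ConfRoom-Mac" "zoomroom" "s3cret"
```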

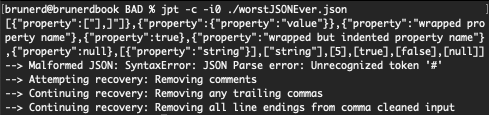

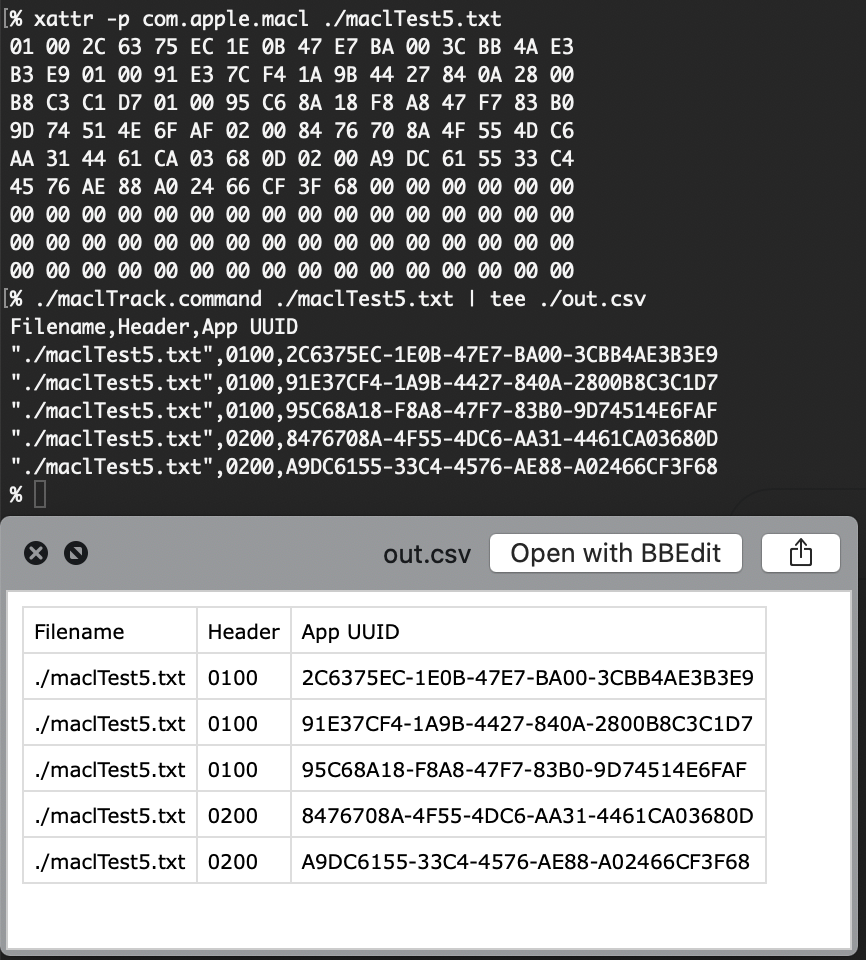

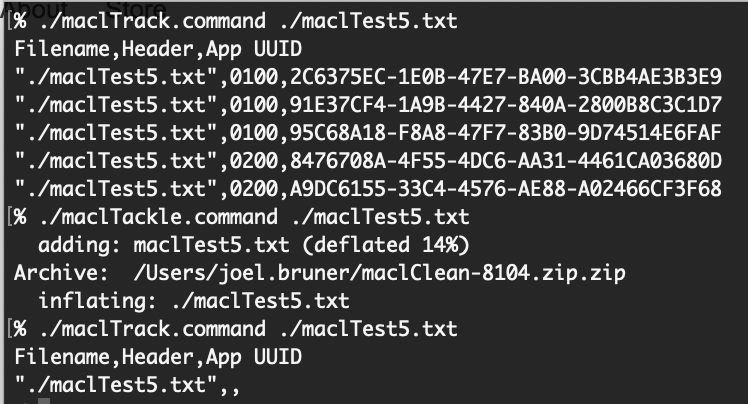

Also, here’s a well-commented Gist as a little show and tell of what the shell-only version of the kcpassword encoder looks like. Enjoy!

Update: I meant to expound on how padding kcpassword to a length that is a multiple of 12 didn’t seem necessary, since it had been working fine on the versions I was testing with. Then I tested on Catalina (thinking of this thread) and was proven wrong. I padded with 0x00 using HexFiend in a successful test, but was reminded that bash can’t handle that character in strings. I padded with 0x01 instead, which worked on some macOS versions but not others. Finally, I settled on doing roughly what macOS does: whereas it pads with the next cipher character and then seemingly random data, I pad with the cipher characters for the length of the padding. This works for Catalina and other macOS versions. 😅

Big hat tip to Pico over at MacAdmins Slack for pointing out this issue in pycreateuserpkg, where it’s made clear that passwords with a length of 12, or a multiple thereof, need another 12 characters of padding (or, in some newer OSes, at least one cipher character terminating the kcpassword data). Thanks! 👍
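For the curious, here is a rough sketch of the padding scheme described above. It is not the Gist itself: `kcpassword_hex` is a made-up name, it uses `od` and bash arithmetic rather than awk, sed, and xxd, and it prints hex instead of writing /etc/kcpassword. The 11-byte XOR key is the one commonly documented for kcpassword, and continuing the key through the padding is my reading of "pad with the cipher characters".

```shell
#!/bin/bash
# Sketch only: prints the encoded bytes as hex rather than writing a file.

# The widely documented 11-byte kcpassword XOR key.
KEY=(7d 89 52 23 d2 bc dd ea a3 b9 1f)

kcpassword_hex() {
    local password="$1" hex out="" i len padded byte
    # Hex-encode the password, two hex digits per byte.
    hex=$(printf '%s' "$password" | od -An -v -tx1 | tr -d ' \n')
    len=$(( ${#hex} / 2 ))
    # Pad up to the next multiple of 12; an exact multiple gains a full extra 12.
    padded=$(( (len / 12 + 1) * 12 ))
    for (( i = 0; i < padded; i++ )); do
        if (( i < len )); then
            # Password byte XOR the key byte at this position.
            byte=$(( 0x${hex:i*2:2} ^ 0x${KEY[i % 11]} ))
        else
            # Padding: the bare key byte, which sidesteps 0x00 in bash strings.
            byte=$(( 0x${KEY[i % 11]} ))
        fi
        out+=$(printf '%02x' "$byte")
    done
    printf '%s\n' "$out"
}

kcpassword_hex ""    # blank password: 12 bytes of pure padding
```

Because the padding bytes are just the key itself, a decoder that XORs them back gets 0x00, which is how the end of the password is found.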